MLE-STAR is a cutting-edge engineering agent for machine learning

August 6, 2025


MLE-STAR is a cutting-edge machine learning engineering agent that automates a variety of machine learning tasks across diverse data modalities while achieving outstanding results. The rise of machine learning (ML) has fueled high-performance applications in a wide range of real-world scenarios, such as tabular classification and image denoising.

However, building these models remains arduous for machine learning engineers, requiring extensive iterative experimentation and data engineering. To streamline these demanding workflows, recent research has focused on using large language models (LLMs) as agents for machine learning engineering (MLE). Leveraging their inherent coding and reasoning abilities, these agents frame ML tasks as code optimization problems: they explore candidate code solutions and ultimately produce executable code, such as a Python script, from the provided datasets and task description. Despite these promising early results, current MLE agents face several limitations that curtail their effectiveness.
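To make the "ML engineering as code optimization" framing concrete, here is a minimal Python sketch of the generic propose, execute, and score loop. It assumes a hypothetical `propose_candidate` function standing in for the LLM; instead of generating and running a real script, it returns a fake solution whose validation score is drawn near the current best. This illustrates the general agent pattern, not MLE-STAR's implementation.

```python
import random
from typing import Callable, List, Tuple

# A "solution" here is a callable that returns a validation score (higher is
# better). In a real agent it would be a generated Python script executed in
# a sandbox, with the metric parsed from its output.
Solution = Callable[[], float]


def propose_candidate(rng: random.Random, best_score: float) -> Tuple[str, Solution]:
    """Hypothetical stand-in for an LLM proposing a new script.

    Returns a name and a fake solution whose score lies near the current best,
    mimicking small incremental edits.
    """
    score = best_score + rng.uniform(-0.05, 0.05)
    return f"candidate@{score:.3f}", lambda: score


def optimize(n_iters: int, seed: int = 0) -> Tuple[str, float, List[float]]:
    """Greedy search: keep a candidate only if it beats the best score so far."""
    rng = random.Random(seed)
    best_name, best_score = "baseline", 0.5
    history = [best_score]
    for _ in range(n_iters):
        name, solution = propose_candidate(rng, best_score)
        score = solution()  # "execute the script" and read its metric
        if score > best_score:  # accept improvements only
            best_name, best_score = name, score
        history.append(best_score)
    return best_name, best_score, history


name, score, hist = optimize(20)
```

Because only improvements are accepted, `hist` is non-decreasing. Note that each proposal is unconstrained here; nothing in this loop forces the agent to dig deeply into any one part of the pipeline.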

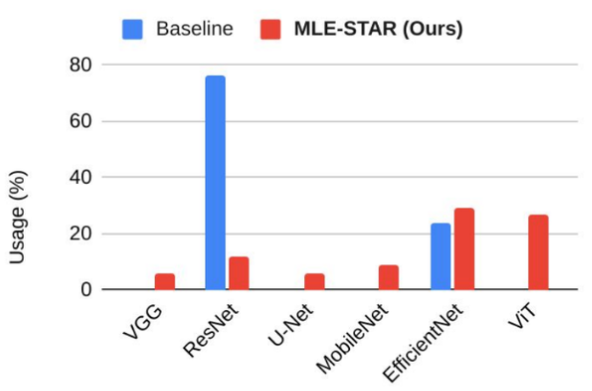

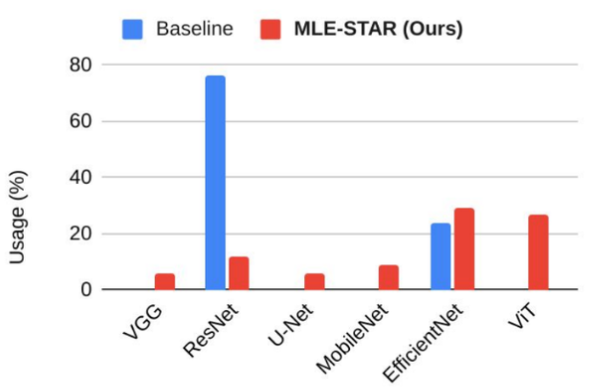

First, their heavy reliance on the LLM's pre-existing knowledge often biases them toward familiar, frequently used methods (e.g., the scikit-learn library for tabular data), so they overlook potentially superior task-specific approaches. Second, these agents typically explore by modifying the entire code structure at once in each iteration. Because they cannot explore a particular pipeline component in depth and iteratively (for example, by exhaustively experimenting with different feature engineering options), they often shift focus prematurely to other stages, such as model selection or hyperparameter tuning. In our recent paper, we introduce MLE-STAR, a novel ML engineering agent that integrates web search with targeted code block refinement.

Unlike alternative approaches, MLE-STAR begins each ML challenge by searching the web for suitable models to establish a solid foundation. It then carefully improves this foundation by testing the most crucial parts of the code. For even better results, MLE-STAR also uses a novel strategy for combining multiple models. This approach is highly effective: MLE-STAR outperformed the alternatives by a significant margin and won medals in 63% of the Kaggle competitions in MLE-Bench-Lite.

Introducing MLE-STAR

To generate the initial solution code, MLE-STAR uses web search to retrieve relevant and potentially state-of-the-art approaches for building a model.[1] To improve that solution, MLE-STAR then extracts a specific code block that represents a distinct ML pipeline component, such as feature engineering or ensemble building.
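One way to picture "extracting a code block" is to treat a solution script as an ordered set of named pipeline components. The sketch below is an illustrative assumption: the `Pipeline` class, the component names, and the functions referenced inside the code strings (`load`, `fit_gbm`, `add_target_encoding`) are hypothetical and not MLE-STAR's internal format. It extracts one block and swaps in a rewritten version while leaving every other block untouched, in contrast to regenerating the whole script each iteration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Pipeline:
    # Component names in execution order, mapped to their source code.
    order: List[str]
    blocks: Dict[str, str] = field(default_factory=dict)

    def render(self) -> str:
        """Concatenate the blocks into a single script."""
        return "\n\n".join(self.blocks[name] for name in self.order)

    def extract_block(self, name: str) -> str:
        """Return the code of a single pipeline component."""
        return self.blocks[name]

    def replace_block(self, name: str, new_code: str) -> "Pipeline":
        """Return a copy with only `name` rewritten; other blocks are unchanged."""
        if name not in self.blocks:
            raise KeyError(f"unknown component: {name}")
        updated = dict(self.blocks)
        updated[name] = new_code
        return Pipeline(order=list(self.order), blocks=updated)


# Hypothetical solution; the code strings are placeholders, never executed here.
pipeline = Pipeline(
    order=["load_data", "feature_engineering", "model", "ensemble"],
    blocks={
        "load_data": "df = load('train.csv')",
        "feature_engineering": "X = df.drop(columns=['target'])",
        "model": "model = fit_gbm(X, df['target'])",
        "ensemble": "pred = model.predict(X_test)",
    },
)

# Targeted refinement: rewrite only the feature-engineering block.
refined = pipeline.replace_block(
    "feature_engineering",
    "X = add_target_encoding(df.drop(columns=['target']))",
)
```

Returning a new `Pipeline` rather than mutating in place keeps the previous version available, which makes it easy to compare scores and roll back a refinement that did not help.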

It then explores strategies tailored to that component, guided by feedback from previous attempts. To identify the code block with the most significant impact on performance, MLE-STAR conducts an ablation study that evaluates the contribution of each ML component. This refinement process is then repeated, modifying various code blocks over successive rounds.

In addition, we present a novel approach to ensemble generation. MLE-STAR first proposes several candidate solutions. Then, instead of relying on a simple voting mechanism based on validation scores, it merges these candidates into a single, improved solution using an ensemble strategy that the agent itself proposes. This ensemble strategy is refined iteratively based on how well the previous strategies performed.
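The two ideas above can be sketched in a few lines of Python, under heavy simplifying assumptions. For the ablation study, a toy scorer (`GAINS` and `evaluate` are hypothetical) gives each enabled component a fixed gain; in practice each evaluation would rerun the training script with one component replaced by a simple default. For ensembling, grid-searched weighted averaging stands in as one example of a strategy an agent might propose; it is not MLE-STAR's actual strategy, and majority voting serves only as a simple baseline. The validation data is made up for illustration.

```python
from itertools import product
from typing import Callable, Dict, List, Optional, Sequence, Tuple

# ---- Ablation: which component matters most? ----
# Hypothetical per-component gains; a real run would measure these.
GAINS = {"feature_engineering": 0.08, "model": 0.05, "ensemble": 0.02}


def evaluate(enabled: Dict[str, bool]) -> float:
    """Toy validation score: baseline plus the gain of each enabled component."""
    return 0.70 + sum(g for name, g in GAINS.items() if enabled[name])


def ablation_study(components: Sequence[str],
                   score_fn: Callable[[Dict[str, bool]], float]) -> List[Tuple[str, float]]:
    """Rank components by how much the score drops when each one is disabled."""
    full = {c: True for c in components}
    base = score_fn(full)
    impacts = [(c, base - score_fn(dict(full, **{c: False}))) for c in components]
    return sorted(impacts, key=lambda item: item[1], reverse=True)


# An agent would focus its next rewrites on the top-ranked component.
ranking = ablation_study(list(GAINS), evaluate)

# ---- Ensembling: weighted averaging vs. majority voting ----
def accuracy(probs: Sequence[float], labels: Sequence[int]) -> float:
    return sum((p >= 0.5) == bool(y) for p, y in zip(probs, labels)) / len(labels)


def majority_vote(cands: Sequence[Sequence[float]]) -> List[float]:
    """Predict 1.0 where more than half of the candidates predict class 1."""
    n = len(cands)
    return [float(sum(c[i] >= 0.5 for c in cands) * 2 > n) for i in range(len(cands[0]))]


def best_weighted_average(cands: Sequence[Sequence[float]], labels: Sequence[int],
                          grid: Sequence[float] = (0.0, 0.25, 0.5, 0.75, 1.0)
                          ) -> Tuple[Optional[Tuple[float, ...]], float]:
    """Grid-search non-negative blend weights on validation data."""
    best_w: Optional[Tuple[float, ...]] = None
    best_acc = -1.0
    for w in product(grid, repeat=len(cands)):
        total = sum(w)
        if total == 0:
            continue  # skip the all-zero weight vector
        blended = [sum(wk * c[i] for wk, c in zip(w, cands)) / total
                   for i in range(len(labels))]
        acc = accuracy(blended, labels)
        if acc > best_acc:
            best_w, best_acc = w, acc
    return best_w, best_acc


# Made-up validation predictions (probability of class 1) from three candidates.
labels = [1, 1, 0, 0]
cands = [
    [0.9, 0.8, 0.2, 0.1],
    [0.6, 0.4, 0.6, 0.4],
    [0.4, 0.45, 0.55, 0.3],
]
vote_acc = accuracy(majority_vote(cands), labels)
weights, blend_acc = best_weighted_average(cands, labels)
```

On this toy data, majority voting lets the two weaker candidates outvote the strong one, while the weight search learns to lean on the strong candidate. An agent-proposed strategy could be anything from such a blend to stacking; the point is that the merge rule itself is searched and refined rather than fixed.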