The brand-new AI connection protocol is Model Context Protocol

- August 11, 2025


AI tools, especially AI agents that can act on behalf of users, are growing in popularity. As a result, developers need to find ways to give the tools access to their apps and SaaS products to keep up with market demands.

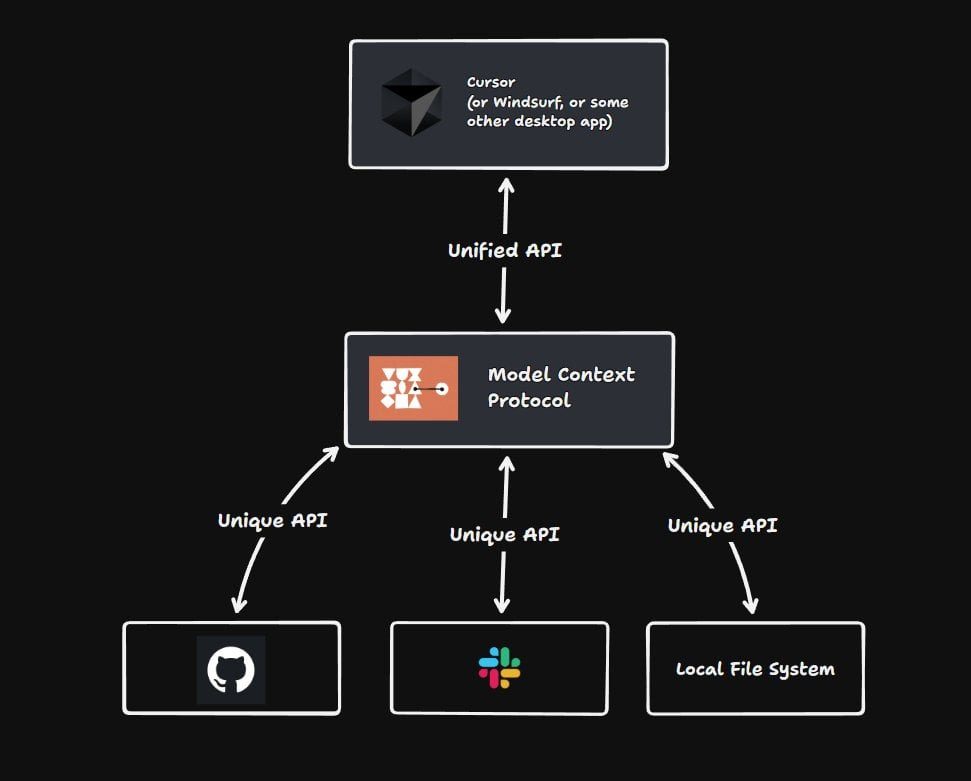

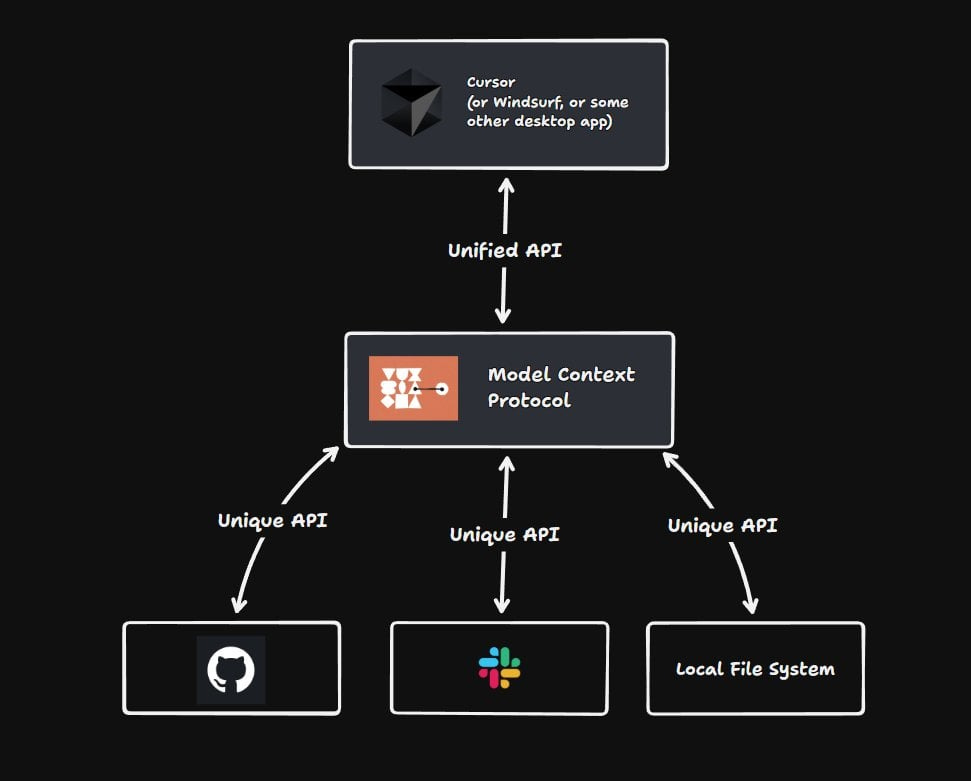

Direct integration has been clunky, requiring a custom integration for each AI tool. The Model Context Protocol (MCP) aims to solve this by providing a standardized way for AI to interact with your apps on behalf of users.

This post explains what MCP is, how it works, and how you can use it in your digital products to add new AI functionality to your current apps, expand your use cases, and reach a larger audience.


What is the Model Context Protocol?

Anthropic created MCP, an open standard and open-source framework that standardizes how artificial intelligence (AI) systems, particularly large language models (LLMs), interact with external systems, tools, and data sources. MCP connects your systems, tools, data, and functionality to AI agents in a structured, machine-readable way by exposing functions through an interface. It facilitates two-way communication between your software and external AI (like ChatGPT calling your CMS, or an AI agent triggering a Jira workflow), allowing you to launch AI-powered features faster.

MCP is a scalable alternative to custom plugins or brittle integrations for each AI product you want to allow users to connect with. It’s pitched as being “the USB-C of AI” due to its universal nature that allows any AI that implements the protocol to connect to your tools, data, and systems.

The following factors make this universal functionality possible:

- Context awareness: MCP servers hand over relevant context like content schemas, analytics, or user history without needing developers to pass raw documents or engineer prompts. As a result, the responses become more precise and specific.

- Automation and autonomy: MCP gives AI agents the ability to take action rather than just respond to questions. Based on natural language instructions, they can invoke operations like publishing content, updating records, or triggering workflows. This can let teams automate work that would otherwise be done by hand.

- Flexibility in integration: MCP separates AI features from custom integrations. Any AI agent can use tools that are exposed by the protocol with the help of an MCP server, so there is no need to build a custom integration for each use case. It links your app to the standardized AI ecosystem, which is expanding.

MCP is great for jobs where you usually have to think about what to do for two minutes and then do it manually for thirty minutes. MCP, for instance, is effective in project management tools where describing a task is simple but it can be time-consuming to actually create the task, correctly represent it on a board, and ensure that fields are filled with information that is typically simple to infer from the context of the story.

Atlassian is a great example of using AI and MCP for innovative workflows like this. Using AI agents to describe tasks and automate intricate workflows frees you up to concentrate on other tasks. Their in-app AI agent is already pretty good, but they go one step further and offer an MCP server that lets you change and retrieve relevant data without having to use the UI.

MCP has the potential to lead to growth in addition to making integration simpler and reducing development burdens. By implementing MCP, developers of tools and platforms can expand their product’s use cases and enable automation and scalability. This not only attracts more users, but it means they get faster, more intelligent workflows by setting their AI assistants to perform tasks using your product, giving you a leg up over competition that doesn’t support MCP.

How does MCP function?

MCP provides a high-level definition of how AI agents can “discover” and interface with external systems’ tools or information. The MCP server acts as an interpreter between the AI client and your services: it tells the client what your services offer and how to use them.

The process begins with discovery. The AI agent connects to an MCP server, which returns a list of available tools (functions that the service can perform) along with their context, expressed as machine-readable definitions. These definitions name each tool (such as “publish content” or “query database”) and describe what it does.

When the agent decides to use a tool, it sends a JSON request (typically via HTTP, but MCP also supports STDIO). The MCP server processes the request, performs the action in the external system, and returns a structured response.
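The discover-then-call exchange described above can be sketched in a few lines. This is a minimal, in-memory illustration, not a real MCP server: it assumes the JSON-RPC 2.0 message shape and the `tools/list` and `tools/call` method names from the MCP specification, skips the initialization handshake and the transport entirely, and uses a hypothetical `publish_content` tool for an imaginary CMS.

```python
import json

# Hypothetical tool registry for an imaginary CMS. The tool name, schema,
# and handler are illustrative assumptions, not part of any real product.
TOOLS = {
    "publish_content": {
        "description": "Publish a draft entry by its ID.",
        "inputSchema": {
            "type": "object",
            "properties": {"entry_id": {"type": "string"}},
            "required": ["entry_id"],
        },
        "handler": lambda args: f"Published entry {args['entry_id']}",
    },
}


def error_response(request_id, code: int, message: str) -> str:
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}
    )


def handle_request(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request and return the JSON response."""
    request = json.loads(raw)
    request_id = request.get("id")
    method = request.get("method")
    params = request.get("params", {})

    if method == "tools/list":
        # Discovery: describe every tool without exposing its handler.
        result = {
            "tools": [
                {
                    "name": name,
                    "description": tool["description"],
                    "inputSchema": tool["inputSchema"],
                }
                for name, tool in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        # Invocation: run the named tool and wrap its output as text content.
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return error_response(request_id, -32602, "Unknown tool")
        text = tool["handler"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return error_response(request_id, -32601, "Method not found")

    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})


if __name__ == "__main__":
    print(handle_request(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})))
    print(handle_request(json.dumps({
        "jsonrpc": "2.0",
        "id": 2,
        "method": "tools/call",
        "params": {"name": "publish_content", "arguments": {"entry_id": "abc123"}},
    })))
```

In practice you would likely build on an official MCP SDK rather than hand-rolling the dispatch; the point here is only that the agent first learns what tools exist, then calls one by name with structured arguments.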

The main components of an MCP setup include:

Host application: The external system being exposed (for example, a CMS or CRM).

MCP server: The server that exposes functions, tools, and context of the host application.

MCP client: The AI model or agent that wants to interact with the host application.

Transport layer: Typically HTTP, which serves as the protocol for sending and receiving structured requests and responses.

What problems does the Model Context Protocol solve?

Most of the time, adding AI features to your app doesn’t work out well. The majority of teams lack the resources necessary to develop AI features that are effective enough to serve their use case. In a similar vein, incorporating AI integrations that grant broad access without considering the context can result in unexpected outcomes (for both you and your users).

MCP, on the other hand, lets developers expose and support specific, extensively tested actions that their apps allow AI to perform. This makes sure that those actions give customers value rather than wasting time or effort.

MCP enables dynamic scoping. This ensures you don’t overload the LLM with unnecessary context and make it perform worse. Since MCP can scope tool access by environment and credentials, enterprises can limit what AI can access or modify. This helps stop AI from going beyond what a user intended for it to do.

LLM isolation

Even LLMs that provide web search capabilities lack direct integration with other systems and are mostly isolated. This makes it difficult to connect them to private systems, enterprise databases, and internal applications. MCP servers are a way to plug your AI tools into this valuable information.

For example, you might want to set your AI to help update information in an internal business app. Without MCP, this would involve either copying and pasting records manually (which is time-consuming and error-prone) or connecting the AI to your app’s REST API, which gives it broad access that may result in accidental data changes or security issues if the AI doesn’t have full context of what it can and can’t (or shouldn’t) do. Implementing MCP allows the AI to interact with the data in this tool safely while allowing you to work with this information through prompting and natural language commands.
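One way to picture the difference between broad API access and a scoped set of tools is a server that only advertises the tools a given credential and environment are allowed to use. The sketch below is an assumption-heavy illustration: the tool names, scope strings, and environment labels are all hypothetical, and MCP itself does not prescribe this particular filtering scheme.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    name: str
    required_scope: str       # hypothetical permission string
    environments: frozenset   # environments where the tool may be offered


# Hypothetical tools for an internal business app.
ALL_TOOLS = [
    Tool("read_record", "records:read", frozenset({"staging", "production"})),
    Tool("update_record", "records:write", frozenset({"staging", "production"})),
    Tool("delete_all_records", "records:admin", frozenset({"staging"})),
]


def visible_tools(granted_scopes: set[str], environment: str) -> list[str]:
    """Return the names of tools this credential may see in this environment."""
    return [
        tool.name
        for tool in ALL_TOOLS
        if tool.required_scope in granted_scopes and environment in tool.environments
    ]


if __name__ == "__main__":
    # An assistant holding read and write scopes in production never sees
    # the destructive tool, so it cannot call it by accident.
    print(visible_tools({"records:read", "records:write"}, "production"))
```

Because the agent only discovers the tools returned here, anything outside the granted scopes is invisible to it rather than merely forbidden.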

The NxM problem

MCP addresses the NxM integration problem that developers face. N is the number of LLMs, and M is the number of external tools or apps they might want to connect to. Without a shared standard, each LLM requires its own integration for each external tool, so the number of integration points grows rapidly. Because developers must solve the same integration problem for multiple models, this results in code repetition and wasted development time.

Developers might, for instance, have finished integrating ChatGPT for summaries but want to use a different AI product for transcription. They would have to start over with the integration, and each custom integration would need to be maintained to avoid failure. Additionally, since different AI models handle things in different ways, the code becomes fragmented, which could make the codebase confusing and cause the application to behave in unexpected ways.

Standardization solves all of this by providing a consistent interface for services to interact.
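The arithmetic behind the NxM problem is easy to check. The sketch below assumes the usual framing of a shared protocol: each LLM implements the client side once and each tool implements the server side once, so the count becomes N + M instead of N x M. The function names and the sample numbers are illustrative only.

```python
def point_to_point_integrations(n_models: int, m_tools: int) -> int:
    """Every model needs its own custom integration with every tool."""
    return n_models * m_tools


def shared_protocol_integrations(n_models: int, m_tools: int) -> int:
    """Each model and each tool implements the shared protocol once."""
    return n_models + m_tools


if __name__ == "__main__":
    n_models, m_tools = 4, 10  # illustrative numbers, not taken from the post
    print(point_to_point_integrations(n_models, m_tools))   # 40
    print(shared_protocol_integrations(n_models, m_tools))  # 14
```

The gap widens as either number grows, which is why a standard pays off most in a crowded ecosystem.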

The rise of AI agents

AI agents build on LLM chatbots by adding three components: autonomous reasoning, memory, and tool use. AI agents don’t just generate text; they can act on behalf of the user and take actual actions. MCP allows those agents to do this with external systems like content platforms, analytics tools, or CRMs, and even combine tools through a natural language interface. For example, Contentful exposes functionality through its AI actions that an AI agent could use.

You could, for instance, ask an agent to fetch some pictures from your online photo storage, write a little about them, and then draft a blog post for you. The agent could then use an SEO tool to get information about your previous posts and suggest what you could add to make your next one more popular. The agent is able to autonomously combine data from all of these different tools and take action in a matter of seconds after you describe what you want in natural language.